Image Source: Freepik

Complex statistical methods used in Design of Experiments (DOE) are essential for sophisticated analysis in many fields. DOE is a branch of applied statistics that deals with planning, conducting, analyzing, and interpreting controlled tests to evaluate the factors that control the value of a parameter or group of parameters.

This approach is compelling because it allows multiple input factors to be manipulated simultaneously and identifies their effects on a desired output. It is more efficient than the traditional “one factor at a time” (OFAT) method, and it can reveal important interactions between variables that OFAT would miss.

This blog examines the efficiency and usefulness of these statistical methods across a range of experimental settings, emphasizing their significance in modern research and industry.

Advanced Statistical Techniques in Design of Experiments (DOE)

Image Source: Pexels

The field of Design of Experiments (DOE) leverages various advanced statistical techniques to investigate the complex relationships between input factors and their resultant effects on a given process or product. These techniques enable researchers and engineers to systematically plan, conduct, and analyze experiments, providing valuable insights into process optimization, quality improvement, and innovative solutions.

1. Factorial Designs

Factorial Designs are a cornerstone of DOE because they evaluate multiple factors simultaneously. They allow investigation of both the individual (main) effects of each factor and the interaction effects between them. A full factorial design tests every possible combination of factors and their levels, which provides a complete picture of the process.

However, a full factorial becomes impractical with many factors because the number of runs grows exponentially: k factors at two levels each require 2^k runs. Fractional factorial designs address this by testing only a carefully chosen subset of the possible combinations, providing a more manageable yet still informative approach. These designs are especially useful in the initial stages of experimentation, when the goal is to identify the most significant factors.
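To make the run counts concrete, here is a minimal Python sketch (using NumPy) that builds a two-level full factorial and a half fraction in coded units (-1 = low, +1 = high). The function names are illustrative, and the half fraction assumes the common generator in which the last factor equals the product of all the others.

```python
import itertools

import numpy as np


def full_factorial(k):
    """All 2**k runs for k two-level factors, coded -1 (low) and +1 (high)."""
    return np.array(list(itertools.product([-1, 1], repeat=k)))


def half_fraction(k):
    """A 2**(k-1) half fraction: a full factorial in the first k-1 factors,
    with the last factor set by the generator X_k = X_1 * X_2 * ... * X_(k-1)."""
    base = full_factorial(k - 1)
    generated = np.prod(base, axis=1, keepdims=True)
    return np.hstack([base, generated])


def main_effects(design, y):
    """Main effect of each factor: mean(y at +1) minus mean(y at -1)."""
    return design.T @ y / (len(y) / 2)


print(len(full_factorial(5)))  # 32 runs
print(len(half_fraction(5)))   # 16 runs
```

With five factors the half fraction halves the number of runs. The price is aliasing: under this generator each main effect is confounded with a four-factor interaction, which is usually an acceptable trade-off when screening.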

2. Response Surface Methodology (RSM)

Response Surface Methodology (RSM) is a more sophisticated technique for modeling and analyzing problems in which several variables influence a quality characteristic (the response). The primary goal of RSM is to find the operating conditions that produce the most desirable response, which makes it invaluable for optimization.

RSM involves using designed experiments to obtain an empirical response model, followed by statistical techniques to explore the relationships between the factors and the response. This approach is beneficial when the response is influenced by several variables and their interactions, making it a popular choice in product and process optimization scenarios.
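As an illustration of the "empirical model, then explore" workflow, the sketch below fits a second-order polynomial in two coded factors by least squares and then locates its stationary point by setting the gradient to zero. The 3x3 grid and the response values are hypothetical and noise-free, chosen only so the arithmetic can be checked.

```python
import numpy as np


def quadratic_model_matrix(x1, x2):
    """Columns for y = b0 + b1*x1 + b2*x2 + b12*x1*x2 + b11*x1**2 + b22*x2**2."""
    return np.column_stack([np.ones_like(x1), x1, x2, x1 * x2, x1**2, x2**2])


def fit_second_order(x1, x2, y):
    """Least-squares estimates of the six second-order coefficients."""
    b, *_ = np.linalg.lstsq(quadratic_model_matrix(x1, x2), y, rcond=None)
    return b


def stationary_point(b):
    """Solve g + 2 B x = 0, the zero-gradient condition for b0 + g^T x + x^T B x."""
    _, b1, b2, b12, b11, b22 = b
    g = np.array([b1, b2])
    B = np.array([[b11, b12 / 2], [b12 / 2, b22]])
    return np.linalg.solve(2 * B, -g)


# Hypothetical 3x3 grid of coded settings with an illustrative response.
grid1, grid2 = np.meshgrid([-1.0, 0.0, 1.0], [-1.0, 0.0, 1.0])
x1, x2 = grid1.ravel(), grid2.ravel()
y = 80 + 4 * x1 + 2 * x2 + 1 * x1 * x2 - 3 * x1**2 - 2 * x2**2
b = fit_second_order(x1, x2, y)
print(stationary_point(b))
```

In practice you would also inspect the eigenvalues of B: all negative indicates a maximum, all positive a minimum, and mixed signs a saddle point.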

3. Taguchi Methods

Developed by Genichi Taguchi, the Taguchi Methods are a form of DOE that emphasizes robust design and variance reduction. Unlike traditional methods that focus primarily on mean performance, Taguchi’s approach aims to make a product’s or process’s performance more consistent through quality engineering. A key aspect of Taguchi Methods is the use of orthogonal arrays to study many variables with a minimal number of experiments.

Taguchi’s approach also uses the signal-to-noise (S/N) ratio, a summary statistic that rewards both a good average response and low variability, to choose settings that make the system or process more robust against external noise. These methods have been widely adopted in engineering and manufacturing to improve product quality and reduce the costs associated with poor quality.
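The three classic S/N ratios can be written in a few lines. This is a minimal sketch of the standard textbook formulas; the replicate values are hypothetical measurements of two settings under varying noise conditions, not data from any real study.

```python
import numpy as np


def sn_smaller_is_better(y):
    """S/N = -10 log10(mean(y^2)); for responses whose ideal value is zero (e.g. defects)."""
    y = np.asarray(y, dtype=float)
    return -10 * np.log10(np.mean(y**2))


def sn_larger_is_better(y):
    """S/N = -10 log10(mean(1 / y^2)); for responses where bigger is better (e.g. strength)."""
    y = np.asarray(y, dtype=float)
    return -10 * np.log10(np.mean(1 / y**2))


def sn_nominal_is_best(y):
    """S/N = 10 log10(mean^2 / sample variance); rewards hitting a target with low spread."""
    y = np.asarray(y, dtype=float)
    return 10 * np.log10(y.mean() ** 2 / y.var(ddof=1))


# Hypothetical replicates for two runs of an orthogonal array, each measured
# under several noise conditions; the larger S/N marks the more robust setting.
setting_a = [9.8, 10.1, 10.0, 10.2]
setting_b = [9.0, 11.0, 10.5, 9.5]
print(sn_nominal_is_best(setting_a), sn_nominal_is_best(setting_b))
```

Both settings average close to 10, but setting_a varies far less across noise conditions, so it earns the higher nominal-is-best S/N.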

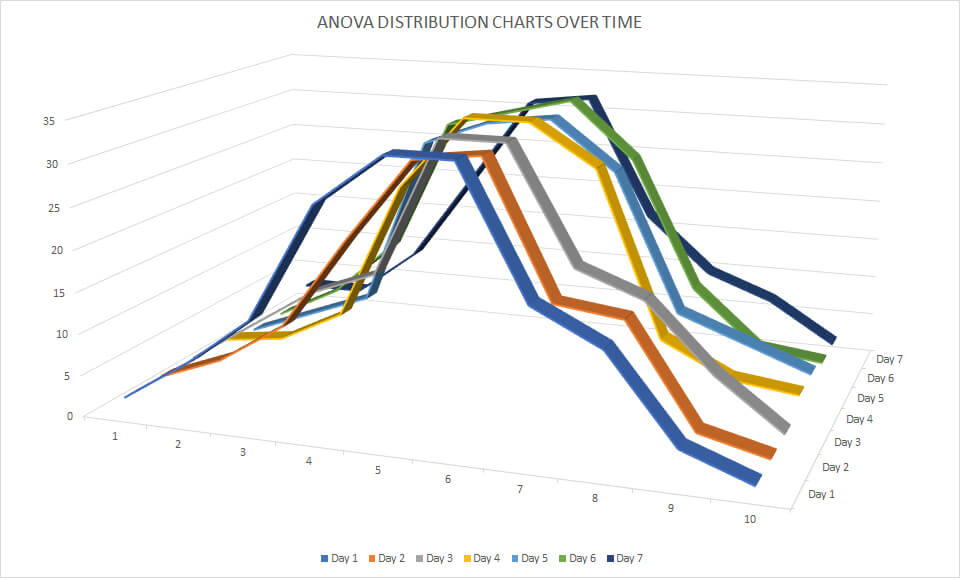

4. Mixed-Model ANOVA (Analysis of Variance)

Mixed-Model Analysis of Variance (ANOVA) is an advanced statistical technique used in DOE for experiments involving fixed and random factors. Fixed factors are those whose levels are set by the experimenter and are of primary interest, such as different treatments or conditions. Random factors, on the other hand, represent random sources of variability, like batch-to-batch differences or different operators.

Image Source: Wikimedia Commons

Mixed-model ANOVA is useful for analyzing data from experiments that include both types of factors. It quantifies not only the impact of the fixed factors but also how much variability the random factors contribute and how the fixed effects might vary across them. This method is widely used in industrial and agricultural experiments where random factors cannot be controlled but their impact needs to be understood.
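A minimal sketch of the classic balanced case, assuming one fixed factor A (e.g. treatment) crossed with one random factor B (e.g. batch) and equal replication in every cell. The key difference from an all-fixed ANOVA is that the fixed effect is tested against the A x B interaction mean square rather than pure error. The variance-component formula assumes the unrestricted mixed model, and the data are simulated purely for illustration.

```python
import numpy as np


def mixed_two_factor_anova(y):
    """Balanced two-factor ANOVA with A fixed and B random.

    y has shape (a, b, n): a fixed levels, b random levels, n replicates per cell."""
    a, b, n = y.shape
    grand = y.mean()
    mean_a = y.mean(axis=(1, 2))  # fixed-factor level means
    mean_b = y.mean(axis=(0, 2))  # random-factor level means
    mean_ab = y.mean(axis=2)      # cell means
    ss_a = b * n * np.sum((mean_a - grand) ** 2)
    ss_b = a * n * np.sum((mean_b - grand) ** 2)
    ss_ab = n * np.sum((mean_ab - mean_a[:, None] - mean_b[None, :] + grand) ** 2)
    ss_e = np.sum((y - mean_ab[:, :, None]) ** 2)
    ms_a = ss_a / (a - 1)
    ms_b = ss_b / (b - 1)
    ms_ab = ss_ab / ((a - 1) * (b - 1))
    ms_e = ss_e / (a * b * (n - 1))
    return {
        "SS": (ss_a, ss_b, ss_ab, ss_e),
        # Fixed effect tested against the interaction: batch-to-batch variation
        # in the A effect inflates MS_A, and MS_AB carries the same inflation.
        "F_A": ms_a / ms_ab,
        "df_A": (a - 1, (a - 1) * (b - 1)),
        "F_AB": ms_ab / ms_e,
        # Method-of-moments batch variance (unrestricted model), truncated at zero.
        "var_B": max((ms_b - ms_ab) / (a * n), 0.0),
    }


rng = np.random.default_rng(1)
# Simulated data: 3 treatments (fixed) x 4 batches (random) x 2 replicates.
batch_effects = rng.normal(0.0, 2.0, size=4)
y = (np.array([10.0, 12.0, 11.0])[:, None, None]
     + batch_effects[None, :, None]
     + rng.normal(0.0, 1.0, size=(3, 4, 2)))
result = mixed_two_factor_anova(y)
print(result["F_A"], result["df_A"], result["var_B"])
```

For unbalanced data or more complex random structures, a likelihood-based mixed-model fit (REML) is the usual choice instead of these hand-computed mean squares.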

5. Covariance Analysis (ANCOVA)

Analysis of Covariance (ANCOVA) blends ANOVA and regression. In DOE it is used to account for one or more covariates (continuous predictors) that are not the primary focus of the study but could affect the outcome variable. ANCOVA adjusts the response for the covariates before testing the effects of the factors of interest. When the covariate is correlated with the response, this adjustment reduces the error variance and therefore increases statistical power.

For example, in an experiment testing the effect of a new teaching method, the pre-test scores could be used as a covariate to adjust the post-test scores. This technique ensures a more accurate and reliable analysis by controlling for potential confounding variables.
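The teaching-method example can be sketched as a single least-squares fit with a group indicator and a centered covariate. The scores below are hypothetical and deliberately noise-free so the adjustment is easy to follow; the function name is illustrative.

```python
import numpy as np


def ancova(post, group, pre):
    """Fit post = b0 + tau*group + beta*(pre - mean(pre)) by least squares.

    group is coded 0 (current method) or 1 (new method). Returns the adjusted
    treatment effect tau, the covariate slope beta, and the adjusted group means
    (predicted post-test scores at the overall mean pre-test score)."""
    post, group, pre = (np.asarray(v, dtype=float) for v in (post, group, pre))
    centered = pre - pre.mean()
    X = np.column_stack([np.ones_like(post), group, centered])
    (b0, tau, beta), *_ = np.linalg.lstsq(X, post, rcond=None)
    return tau, beta, {"current": b0, "new": b0 + tau}


# Hypothetical scores for six students (three per teaching method).
pre = [50, 60, 70, 55, 65, 75]
group = [0, 0, 0, 1, 1, 1]
post = [50, 58, 66, 59, 67, 75]
tau, beta, adjusted = ancova(post, group, pre)
print(tau, beta, adjusted)
```

Here the raw difference in post-test means is 9 points, but the new-method group also started 5 points higher on the pre-test. With a slope of 0.8, that head start accounts for 4 points, leaving an adjusted treatment effect of 5.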

6. Box-Behnken Design

The Box-Behnken design is a response surface design that is particularly efficient for fitting quadratic models. It deliberately omits runs in which all factors are simultaneously at their extreme levels (the corners of the design cube). As a result, it requires fewer runs than a three-level full factorial design while still allowing estimation of the factors’ main effects, interaction effects, and quadratic effects.

Box-Behnken designs are typically used when extreme conditions are impractical, unsafe, or likely to lead to undesirable responses. They provide a balanced approach with sufficient information for robust analysis, making them a popular choice in process optimization, where response variables must be modeled on a curved response surface.
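A minimal sketch of how such a design can be generated in coded units: for every pair of factors, run a 2x2 factorial at the extremes while the remaining factors sit at their midpoints, then add center points. This pairwise construction gives the classic 15-run design for three factors; published tables for larger numbers of factors may group factors differently, so treat it as an illustration rather than a replacement for standard design software.

```python
import itertools

import numpy as np


def box_behnken(k, n_center=3):
    """Pairwise Box-Behnken-style design in coded units (-1, 0, +1).

    Each pair of factors gets a 2x2 factorial at +/-1 with all other factors at 0,
    so no run ever places every factor at an extreme at the same time."""
    runs = []
    for i, j in itertools.combinations(range(k), 2):
        for level_i, level_j in itertools.product([-1, 1], repeat=2):
            row = [0] * k
            row[i], row[j] = level_i, level_j
            runs.append(row)
    runs += [[0] * k for _ in range(n_center)]
    return np.array(runs)


print(len(box_behnken(3)))  # 15 runs, versus 27 for a 3**3 full factorial
```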

7. Central Composite Design (CCD)

Central Composite Design (CCD) is a widely used design in response surface methodology (RSM). It is particularly suited to fitting second-order (quadratic) models for optimization. A CCD consists of three types of experimental runs:

- Factorial or fractional factorial runs.

- Axial runs (which are at some distance from the center point along the axis of each design variable).

- Center runs (where all factors are set to their middle levels).

This structure allows for efficient estimation of the main effects, interaction effects, and quadratic effects of the factors. CCDs are commonly used when a more refined response surface model is necessary, especially in process optimization, where the relationship between the variables and the response is not linear.
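The three building blocks of a CCD can be assembled directly. This sketch assumes a full 2^k factorial portion and, by default, the rotatable axial distance alpha = (2^k)^(1/4); setting alpha=1.0 gives the face-centered variant, which is often used when settings beyond the factorial range are infeasible.

```python
import itertools

import numpy as np


def central_composite(k, n_center=4, alpha=None):
    """Central composite design in coded units: 2**k factorial corners,
    2k axial (star) points at +/-alpha, and n_center center runs."""
    if alpha is None:
        alpha = (2 ** k) ** 0.25  # rotatable choice for a full 2**k factorial portion
    factorial = np.array(list(itertools.product([-1.0, 1.0], repeat=k)))
    axial = np.vstack([sign * alpha * np.eye(k)[i] for i in range(k) for sign in (-1, 1)])
    center = np.zeros((n_center, k))
    return np.vstack([factorial, axial, center])


print(central_composite(2))
```

For two factors this yields 4 factorial, 4 axial, and 4 center runs, with the axial points at a distance of about 1.414 from the center.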

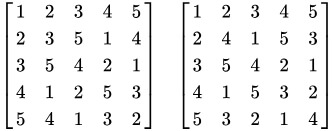

8. Latin Square Design

Latin Square Design is a specialized experimental design that simultaneously controls variation in two directions. This design is particularly beneficial in agricultural experiments where variables such as soil fertility and rainfall can affect outcomes.

Image Source: Wikipedia

Each row of the square represents a level of one blocking variable and each column a level of the other, and each treatment appears exactly once in each row and each column. This layout controls for variation across both rows and columns, allowing a more accurate assessment of the treatment effects. The Latin Square Design is resource-efficient, especially when two significant sources of variability need to be controlled: with n treatments it needs only n × n runs.
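The "once per row, once per column" property is easy to construct and check. This sketch starts from a cyclic square and then randomizes rows, columns, and treatment labels, each of which preserves the Latin property. Note that this simple randomization does not sample uniformly from all possible Latin squares, and the field layout is hypothetical.

```python
import numpy as np


def cyclic_latin_square(n):
    """n x n Latin square whose cell (row i, column j) holds treatment (i + j) mod n."""
    i, j = np.indices((n, n))
    return (i + j) % n


def randomized_latin_square(n, seed=None):
    """Shuffle rows, columns, and treatment labels of the cyclic square."""
    rng = np.random.default_rng(seed)
    square = cyclic_latin_square(n)
    square = square[rng.permutation(n)][:, rng.permutation(n)]
    relabel = rng.permutation(n)
    return relabel[square]


def is_latin_square(square):
    """True if every row and every column contains each treatment exactly once."""
    n = square.shape[0]
    symbols = set(range(n))
    return (all(set(row.tolist()) == symbols for row in square)
            and all(set(col.tolist()) == symbols for col in square.T))


# Four treatments A-D assigned to hypothetical field rows and columns.
layout = randomized_latin_square(4, seed=42)
for row in layout:
    print(" ".join("ABCD"[t] for t in row))
```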

9. Plackett-Burman Design

Plackett-Burman Design is employed primarily for screening many factors to identify the most important ones. It is particularly useful in the early stages of experimental design when the number of potential factors is large, and the goal is to narrow down these factors to the few that have significant effects.

Plackett-Burman designs are two-level fractional factorial designs that estimate main effects (assuming interactions are negligible) in a very small number of runs: a design with N runs, where N is a multiple of four, can screen up to N - 1 factors. These designs are unsuitable for exploring interactions between factors or for detailed optimization, but they are highly efficient for determining which factors warrant further investigation in a limited number of experiments.
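As a sketch, the 12-run Plackett-Burman design can be built from its standard generator row: take the 11 cyclic shifts of that row and append a row with every factor at its low level. The resulting columns are balanced and mutually orthogonal, which is what allows 11 main effects to be estimated from only 12 runs. The response below is hypothetical.

```python
import numpy as np

# Standard generator row for the 12-run Plackett-Burman design (11 two-level columns).
PB12_GENERATOR = np.array([+1, +1, -1, +1, +1, +1, -1, -1, -1, +1, -1])


def plackett_burman_12():
    """12 x 11 design: 11 cyclic shifts of the generator plus a row of all -1."""
    rows = [np.roll(PB12_GENERATOR, shift) for shift in range(11)]
    rows.append(-np.ones(11, dtype=int))
    return np.array(rows)


def main_effects(design, y):
    """Main effect of each column: mean(y at +1) minus mean(y at -1)."""
    return design.T @ y / (len(y) / 2)


design = plackett_burman_12()
print(design.shape)  # (12, 11)

# Hypothetical screening response in which only the first factor matters.
y = 10 + 3 * design[:, 0]
print(main_effects(design, y))
```

When fewer than 11 factors are studied, the unused columns are often kept as "dummy" factors whose estimated effects give a rough sense of experimental noise.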

10. Sequential Experimental Designs

Sequential Experimental Designs are a dynamic approach in DOE where the design of an experiment evolves as data is collected and analyzed. This technique involves conducting a series of experiments where the results of one experiment inform the design of the next. The process begins with a preliminary experiment, often broad and exploratory, aimed at identifying important factors or trends.

Based on these initial findings, subsequent experiments are designed with a more focused approach, progressively homing in on the specific areas of interest. This iterative process continues until a satisfactory level of understanding or optimization is achieved. Sequential designs are particularly useful in complex systems where initial knowledge is limited and adaptability is crucial for efficiently exploring the experimental space.
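One simple form of this idea is the method of steepest ascent: run a small factorial around the current settings, use its main effects to estimate the uphill direction, move, and tighten the region when a move stops paying off. The sketch below assumes a hypothetical `run_experiment` function that simulates the process; in a real study each call would be an actual experimental run.

```python
import itertools

import numpy as np


def run_experiment(x):
    """Hypothetical process standing in for a real run; its optimum is at (3, 1)."""
    return 10.0 - (x[0] - 3.0) ** 2 - (x[1] - 1.0) ** 2


def sequential_steepest_ascent(center, step=1.0, max_stages=50, tol=1e-3):
    center = np.asarray(center, dtype=float)
    corners = np.array(list(itertools.product([-1.0, 1.0], repeat=len(center))))
    for _ in range(max_stages):
        # Each stage is a small 2^k factorial around the current best settings.
        y = np.array([run_experiment(center + step * c) for c in corners])
        # Main-effect contrasts estimate the local slope of the response.
        slope = corners.T @ y / len(y)
        norm = np.linalg.norm(slope)
        if norm < tol:
            break  # locally flat: the region of interest has been reached
        candidate = center + step * slope / norm
        if run_experiment(candidate) > run_experiment(center):
            center = candidate  # this stage's results relocate the next design
        else:
            step /= 2  # no gain: the next stage uses a tighter region
            if step < tol:
                break
    return center


print(sequential_steepest_ascent([0.0, 0.0]))
```

Near the optimum, practitioners usually switch from these first-order stages to a second-order design such as a CCD to model curvature.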

11. Generalized Linear Models (GLM)

Generalized Linear Models (GLM) extend the concept of linear regression models to accommodate response variables that are not normally distributed. GLMs are used in DOE to model relationships between a response variable and one or more predictor variables, where the response variable can follow different distributions such as binomial, Poisson, or exponential. This flexibility makes GLMs suitable for various data types and experimental situations.

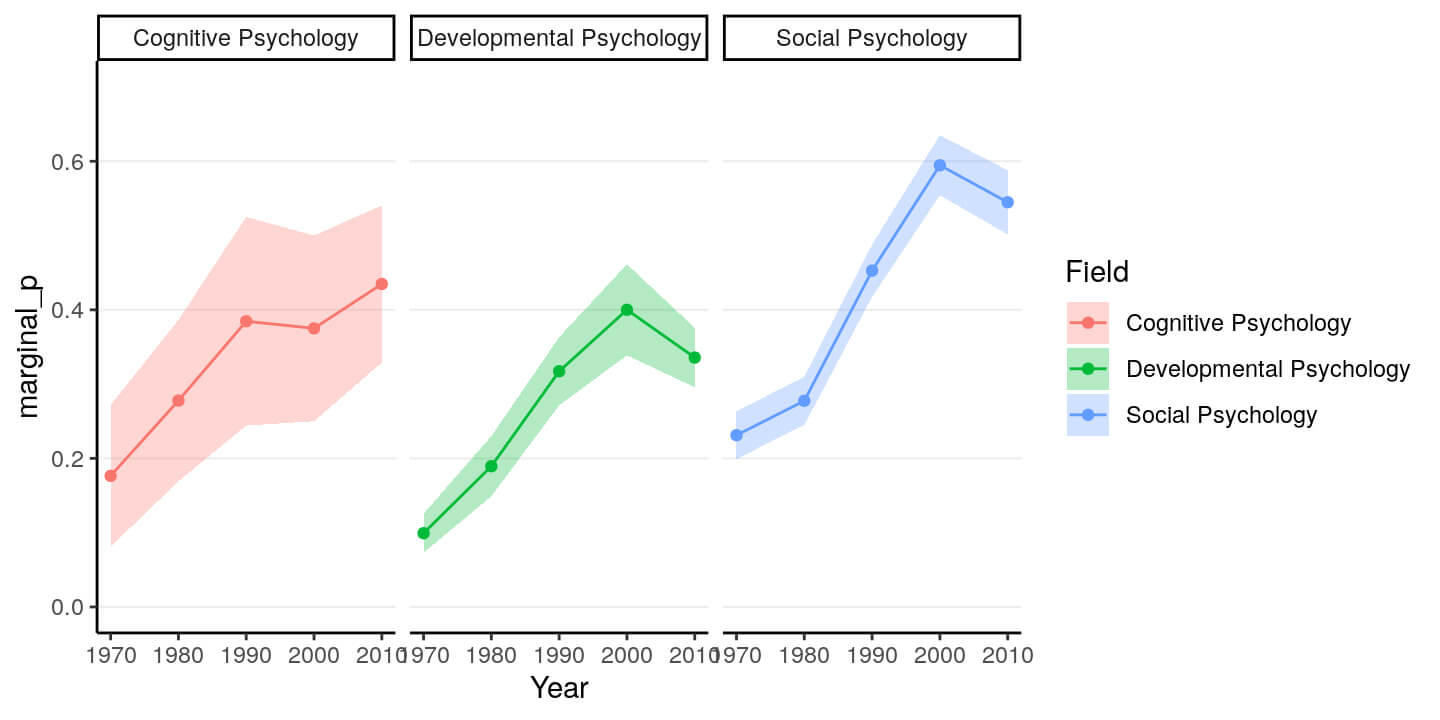

Image Source: Bookdown

GLMs consist of three components: a random component specifying the distribution of the response variable, a systematic component identifying the variables that predict the response, and a link function connecting the systematic component to the mean of the distribution. GLMs are instrumental in situations where the response variable’s relationship with predictors is non-linear or when the variance of the response is not constant.
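To show how the three components fit together, here is a minimal Poisson GLM with a log link, fitted by iteratively reweighted least squares (IRLS). The starting values follow a common convention for Poisson fits (mean = y + 0.1), and the defect counts are hypothetical; production work would normally use an established statistics library.

```python
import numpy as np


def poisson_glm_irls(X, y, n_iter=100, tol=1e-10):
    """Poisson GLM with log link: random component Poisson, systematic
    component X @ beta, link log(mu) = X @ beta. Fitted by IRLS."""
    mu = y + 0.1                  # starting means (keeps log(mu) finite when y = 0)
    eta = np.log(mu)
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        z = eta + (y - mu) / mu   # working response
        w = mu                    # working weights: Var(y) = mu for Poisson
        XtW = X.T * w             # X^T W without forming the diagonal matrix
        new_beta = np.linalg.solve(XtW @ X, XtW @ z)
        eta = X @ new_beta        # systematic component (linear predictor)
        mu = np.exp(eta)          # inverse link maps the predictor to the mean
        if np.max(np.abs(new_beta - beta)) < tol:
            return new_beta
        beta = new_beta
    return beta


# Hypothetical defect counts at three coded settings of one factor (two runs each).
x = np.array([-1.0, -1.0, 0.0, 0.0, 1.0, 1.0])
counts = np.array([2.0, 3.0, 5.0, 4.0, 9.0, 11.0])
X = np.column_stack([np.ones_like(x), x])
beta = poisson_glm_irls(X, counts)
print(np.exp(beta[1]))  # multiplicative change in expected count per coded unit
```

Because of the log link, coefficients act multiplicatively on the expected count, and the Poisson weights let the variance grow with the mean instead of assuming it is constant.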

12. Monte Carlo Simulation

Monte Carlo Simulation is a statistical technique used to understand the impact of risk and uncertainty in prediction and forecasting models. This method uses random sampling and statistical modeling to estimate mathematical functions and mimic the operation of complex systems. In the context of DOE, Monte Carlo simulations can be used to assess the robustness of an experimental design, predict outcomes under different scenarios, and estimate the effects of variability in factors on the response variable.

This approach is beneficial for dealing with complex systems where analytical solutions are difficult or impossible. By running many simulations with varying inputs, researchers can obtain a distribution of outcomes, which can then be analyzed to make probabilistic predictions and informed decisions.
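A common DOE use is propagating factor variability through a fitted model to see how much the response will vary in production. This sketch assumes independent, normally distributed factor settings and a hypothetical fitted model in coded units; the specification limit is also illustrative.

```python
import numpy as np


def monte_carlo_response(model, means, sds, n=100_000, seed=0):
    """Sample factor settings from independent normal distributions and
    evaluate the response model on every draw."""
    rng = np.random.default_rng(seed)
    x = rng.normal(loc=means, scale=sds, size=(n, len(means)))
    return model(x)


def fitted_model(x):
    """Hypothetical model from a designed experiment, in coded units."""
    return 50 + 4 * x[:, 0] - 3 * x[:, 1] + 1.5 * x[:, 0] * x[:, 1]


y = monte_carlo_response(fitted_model, means=[0.5, -0.2], sds=[0.1, 0.1])
print(y.mean(), y.std())
print("P(y < 52):", np.mean(y < 52))  # hypothetical lower specification limit
```

The simulated distribution supports probabilistic statements, such as the expected fraction of output outside specification, that a single predicted value cannot provide.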

Conclusion

Exploring advanced statistical techniques in the Design of Experiments (DOE) underscores their pivotal role in modern research and industry applications. These methodologies, ranging from Factorial Designs to Monte Carlo Simulation, offer a comprehensive toolkit for efficiently understanding and optimizing complex systems. They allow for the simultaneous manipulation of multiple input factors and reveal critical interactions and effects that might be overlooked in more straightforward, traditional approaches.

Image Source: Pexels

By implementing these methodologies, researchers and engineers can improve quality, stimulate creativity, and better understand process dynamics. Thoughtful application of these techniques represents a significant advance over simple experimental procedures, allowing a deeper and more nuanced understanding of a wide range of phenomena across many domains.

Enhance your expertise in the Design of Experiments with Air Academy Associates’ Operational Design of Experiments Course. This course offers an immersive learning experience to deepen your understanding and practical application of DOE methods. Ideal for professionals seeking to improve process efficiency and innovation, our course guides you through essential DOE concepts and real-world applications. Join us to take your skills to the next level and significantly impact your field.